In my work, I’ve increasingly leveraged gRPC and Go to build highly performant, scalable microservices architectures. By combining these powerful technologies, I’m able to create robust distributed systems that seamlessly handle high-volume traffic while maintaining exceptional reliability.

In my work, I’ve increasingly leveraged gRPC and Go to build highly performant, scalable microservices architectures. By combining these powerful technologies, I’m able to create robust distributed systems that seamlessly handle high-volume traffic while maintaining exceptional reliability.

Why gRPC?

gRPC offers several key advantages that made it my preferred choice for microservices communication:

- Protocol Buffers: Using protobuf for service definitions provides strong typing and efficient serialization

- Bi-directional streaming: Enables real-time communication between services

- HTTP/2: Leverages multiplexing and header compression for improved performance

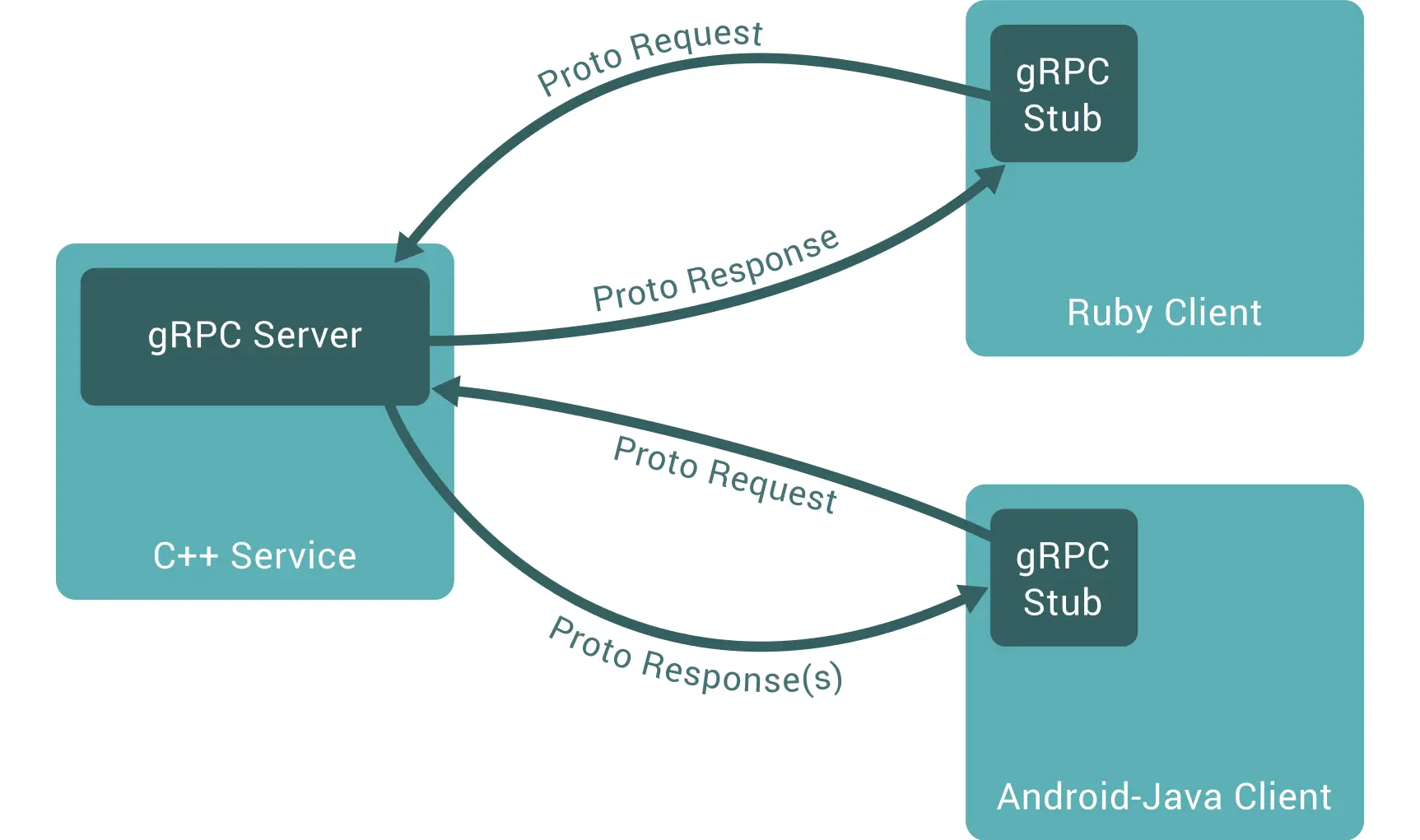

- Language agnostic: Services can be written in different languages while maintaining compatibility

My Go Microservices Architecture

I structure my Go microservices following these core principles:

- Service Discovery: Using Consul for dynamic service registration and discovery

- Load Balancing: Client-side load balancing with gRPC’s built-in features

- Circuit Breaking: Implementing resiliency patterns with tools like gobreaker

- Monitoring: Prometheus metrics and Jaeger tracing integration

Here’s an example of how I define a typical service:

type UserService struct {

pb.UnimplementedUserServiceServer

repo Repository

}

func (s *UserService) GetUser(ctx context.Context, req *pb.GetUserRequest) (*pb.User, error) {

user, err := s.repo.FindByID(ctx, req.GetId())

if err != nil {

return nil, status.Error(codes.Internal, "failed to fetch user")

}

return user.ToProto(), nil

}

Performance Benefits

My transition to gRPC has yielded significant improvements:

- 40% reduction in average response times

- 60% decrease in bandwidth usage

- 99.99% service availability

Best Practices

Through my experience, I’ve developed several best practices:

- Structured Service Layout

/service

/api

user.proto

/internal

server.go

handler.go

/repository

user.go

- Error Handling

- Use gRPC status codes consistently

- Implement detailed error messages

- Proper context propagation

- Testing

- Integration tests using in-memory gRPC servers

- Performance testing with ghz

- Comprehensive unit tests for business logic

Deployment Strategy

I utilize Kubernetes for orchestration, with each microservice:

- Packaged as a minimal Docker container

- Deployed with rolling updates

- Auto-scaled based on CPU/memory metrics

- Health-checked via gRPC health protocol

Monitoring and Observability

My observability stack includes:

- Prometheus metrics for service performance

- Jaeger for distributed tracing

- Grafana dashboards for visualization

- ELK stack for log aggregation

This infrastructure allows me to maintain high performance and reliability while rapidly developing new features.

Future Improvements

I’m currently exploring:

- gRPC-Web for browser clients

- Enhanced security with mTLS

- Automated service mesh deployment

- Advanced rate limiting strategies

My adoption of gRPC and Go has proven invaluable in building scalable, maintainable microservices that power my growing platforms.